Dear Darren,

You probably won’t remember me, but that’s OK. After your recent “Move Fast. Fix Things.” speech, I just wanted to take the opportunity to jog your memory about the excellent work that the APPG on Data Analytics did a few years ago on highlighting not just the opportunities presented by machine learning and generative AI, but also potential risks and possible mitigations.

In case it’s useful, here’s a handy link to the inquiry homepage and the inquiry’s findings:

Two findings from the APPG’s inquiry are perhaps even more relevant now than when the report was published in 2019:

- The need for a public services licence to operate - the report describes this as transparent, standardised ethics rules for public service providers (universities, police, health service, transport) to build public confidence.

- The need for Parliamentary scrutiny to ensure accountability - i.e. built into systems and processes, rather than bolted on retrospectively in response to a scandal or a tragedy.

As you and Lee wrote in the foreword to the report:

Data-driven technologies are revolutionising the way important decisions are made every day. From our treatment in hospital to students’ educational experience; from how we travel to the way our society is policed; we are increasingly reliant on data to enable modern life. However, these developments have not always been accompanied by openness and accountability about the use of our information. This lack of transparency has led to a growing distrust and suspicion about data and algorithm-based decisions, concerns which must be overcome if we are to exploit the many positive ways that data-based technology can improve people’s lives.

In your Move Fast speech you called for nothing less than a complete digital transformation of the state. In that context, it’s incredibly important that these issues of transparency and accountability are addressed.

Who Will Watch The Watchers?

Just consider the White Paper on policing reform, which states that:

The number of live facial recognition vans will increase five-fold, with 50 vans available to every police force in England and Wales to catch violent and sexual offenders.

The government will also roll out new artificial intelligence (AI) tools which will help forces identify suspects from CCTV, doorbell and mobile phone footage that has been submitted as evidence by the public.

A new national centre on AI – Police.AI – will be set up to roll out AI to all forces to free officers from paperwork, delivering up to 6 million hours back to the frontline every year – the equivalent of 3,000 police officers. This means more police on the streets fighting crime and catching criminals.

As the Home Office acknowledges, we know from numerous studies that there are massive issues with accuracy and algorithmic bias in areas such as facial recognition and predictive analytics aka “pre-crime” policing. It would be reassuring to hear that initiatives like the ones mentioned in the White Paper will include checks and balances to ensure that the efficacy of these technologies is critically evaluated. The disadvantaged and marginalised communities that have been shown to be most at risk would particularly appreciate this.

Similarly, people having a Ring doorbell put in probably weren’t thinking too much about law enforcement access to their camera footage. And yet in the United States we have already seen these technologies exploited for oppressive purposes - enabled, however inadvertently, by technology providers like Flock and Amazon. As with Palantir’s involvement in the MoD and the NHS, we should be asking ourselves whether this is actually a good idea, and who we are really getting into bed with. Crucially - what is our threat model if a fascist regime should come to power in the UK?

Quit Karping

It would be remiss of me not to highlight that Palantir insist they are in fact jolly nice and extremely trustworthy, even going so far as to say:

To be absolutely clear, Palantir is not working on any master database project to unify databases across federal agencies. Palantir has not proposed the US Government build a “master list” for the surveillance of citizens, nor have we been asked to consider building such a system for any customer.

Furthermore, Palantir founder and CEO Alex Karp is on the record as saying:

Technology should be built in a way that can be transparent.

So that’s all good. I for one look forward to hearing more about this radical transparency which Alex is so keen on. Perhaps it will be like the moment in Sneakers (1992) when the protagonist Marty Bishop discovers that…

You never know with Alex! He’s a bit of a character, as they say…

Amazon’s Ring tells us that it is completely untrue that the much-reviled ICE has access to the footage from its doorbell cameras. However, they do mention in passing that local law enforcement access is an entirely different matter. Cool cool cool. But hey, it’s neat to be able to see who is at the door from the comfort of your armchair, and whether they are wearing body armour.

But that is all half a world away, at least for the moment.

The Doctor Will Scribe You Now

According to i.AI, the UK government’s Incubator for Artificial Intelligence:

Across the public sector, millions of conversations drive essential services in moments that matter: a social care assessment, a hospital appointment, or a meeting with a probation officer. These interactions are where needs are understood, risks are assessed, and decisions are made that can affect someone’s life.

There has been a lot of talk about using genAI tools to cut down public sector workloads by doing things like transcribing the audio from interviews and appointments, summarising documents and even making decisions about benefits eligibility and medical diagnoses. Is this part of a controlled trial? Well, kinda sorta. The mood music is very much that this is all inevitable and unstoppable, rather than let’s try a bunch of stuff and see what works whilst also (crucially) going to extraordinary lengths to minimise possible harms.

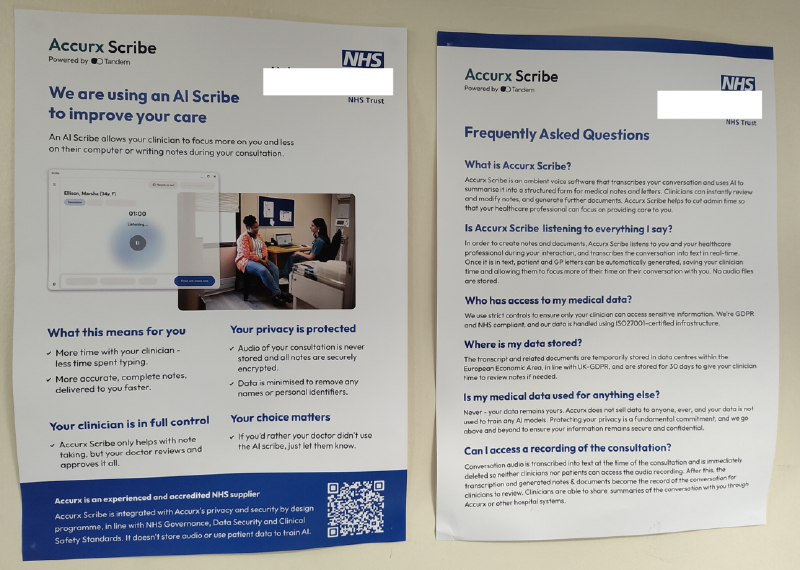

As a case in point, I recently attended a clinic where all of the appointments were being transcribed using an AI tool, but I would have been completely unaware of this had I not happened to spend a few minutes reading the posters on the walls of the clinic while I was waiting to be seen.

| I have so many questions | And also answers |
|---|---|
| Should my consultant or the appointment letter have mentioned the use of an AI transcription tool which was listening to our conversation? | Yes. |
| Should I have been offered the opportunity to decline? | Also yes. |
| Should I have been advised about the use of the tool and asked for my feedback on the accuracy of the AI-assisted doctor’s notes? | Absolutely, but I wasn’t. |
| Should I have been told where the audio recording of our conversation was being sent and who might have access to it? | Oh hell yeah. |
| Should my NHS Trust have a public page where they describe this initiative and report back on its accuracy and effectiveness? | Very much so. |

No Thoughts, Only Hallucinations

Generative AI tools like Large Language Models are, as they are typically deployed, non-deterministic. They can produce a different result every time you use them, even for the same question, and they will always be prone to “hallucinations” because this is what they do. Everything is a hallucination, but if enough of them seem plausible then we tend to disregard this.
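For the technically curious, much of that run-to-run variation comes from how the next word is chosen: it is drawn at random, weighted by the model's scores. Here is a minimal Python sketch of that idea, often called temperature sampling. The four candidate words and their scores are made up purely for illustration, and `sample_token` is a hypothetical helper rather than any vendor's actual API.

```python
import math
import random


def sample_token(logits, temperature, rng):
    """Pick one token index from raw scores using temperature sampling."""
    if temperature == 0:
        # Greedy decoding: always take the highest-scoring token.
        return max(range(len(logits)), key=lambda i: logits[i])
    scaled = [score / temperature for score in logits]
    top = max(scaled)
    # Softmax numerators, shifted by the maximum for numerical stability.
    weights = [math.exp(s - top) for s in scaled]
    return rng.choices(range(len(logits)), weights=weights, k=1)[0]


# Hypothetical next-word candidates and scores (assumed values, not real model output).
vocab = ["yes", "no", "maybe", "unclear"]
logits = [2.0, 1.5, 1.0, 0.5]

rng = random.Random()  # unseeded, so each run of the script can differ
sampled = [vocab[sample_token(logits, 1.0, rng)] for _ in range(10)]
greedy = [vocab[sample_token(logits, 0, rng)] for _ in range(10)]

print("temperature 1.0:", sampled)
print("temperature 0:  ", greedy)
```

In this toy, a temperature of zero always picks the top-scoring word, while any higher temperature can give a different answer each time. Real systems may still vary even at zero for other reasons, and a repeatable answer is not the same thing as a correct one.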

If you want to be disabused of the supposed power and brilliance of genAI, try asking some questions about a subject that you know very well. And then keep asking “are you sure?”, however confident the bot initially claims to be. The results may surprise you.

And when it comes to machine learning techniques for predictive analytics in aspects of our lives that can have profound and life-changing consequences for the individuals involved, recall that crime prevention approaches which build on prior data about crime rates in neighbourhoods have been shown time and time again to reinforce structural discrimination such as racial profiling.

There is no pre-crime division.

Yet.

And maybe, just maybe, there never should be.

With my very warmest regards,

Martin Hamilton